Identity Theft Is Being Fueled by AI

As long as there is evolving technology, there will be evolving fraud. “Deepfake” attacks increased by 1500% in North America between 2022 and 2023.

Cybercriminals, leveraging stolen identity information, have devised sophisticated schemes, complicating fraud mitigation efforts.

Our increasing reliance on digital services for everything from banking to healthcare to government benefits demands a secure and trustworthy way to verify our identities online. Traditional methods like usernames, passwords, and even multifactor authentication are increasingly vulnerable to AI-driven threats.

In a recent survey by Nationwide, 82% of consumers said they were concerned about criminals using AI (Artificial Intelligence) to steal someone’s identity. Many industry and cybersecurity experts predict that AI will increase the risk of identity theft in 2024 because of the speed, volume, and sophistication of attempts to steal personal information. So, you are not alone in having questions and concerns.

Top Reasons for Concern: a perceived increase in recent cyberattacks (55%), the use of Artificial Intelligence (AI) technology (54%), and the proficiency of today’s hackers (51%).

Cybercrime Familiarity: Respondents reported being most familiar with phishing (46%), malware (45%), and cyberbullying (44%). Respondents said they were not at all knowledgeable about DoS (denial of service) attacks (53%) and deepfakes and ransomware (51%).

AI and “botwriting”…

Currently, the AI tool of choice for fraudsters is the writing function of bots like ChatGPT and Bard. These AI authors allow scammers to create prose that looks and sounds as if written by a professional born and (well) educated in the U.S.

AI writing eliminates the telltale typos and grammatical goofs, which makes it easier to trick people. The bots can also accurately mimic the editorial voice and tone of any type of U.S. source, including that of financial institutions and government agencies.

According to a report by cybersecurity consultant SlashNext, this superior botwriting has helped to fuel a staggering 1,265% rise in the number of malicious emails between 2022 and 2023. Many, even most, of those nasty new messages are phishing attacks that use deceptive means to obtain a user’s email address, ID or password combination. There’s also been an uptick in smishing (phishing via fraudulent SMS text messages) and quishing (phishing via bogus QR codes).
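To put a figure like that in perspective, a rise of p percent multiplies the starting count by 1 + p/100. The short Python sketch below applies that arithmetic to the reported 1,265%. The baseline of 1,000 emails is a made-up number used only for illustration and does not come from the report.

```python
def growth_multiplier(percent_rise: float) -> float:
    """Convert a percentage rise into a multiplier on the starting value."""
    return 1 + percent_rise / 100


# Hypothetical baseline chosen only for illustration; not a figure from the report.
baseline_emails = 1_000

multiplier = growth_multiplier(1265)
print(f"A 1,265% rise is about {multiplier:.2f}x the starting volume")
print(f"{baseline_emails:,} emails would become about {baseline_emails * multiplier:,.0f}")
```

In other words, a 1,265% rise means the volume is more than thirteen times what it was, not twelve times.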

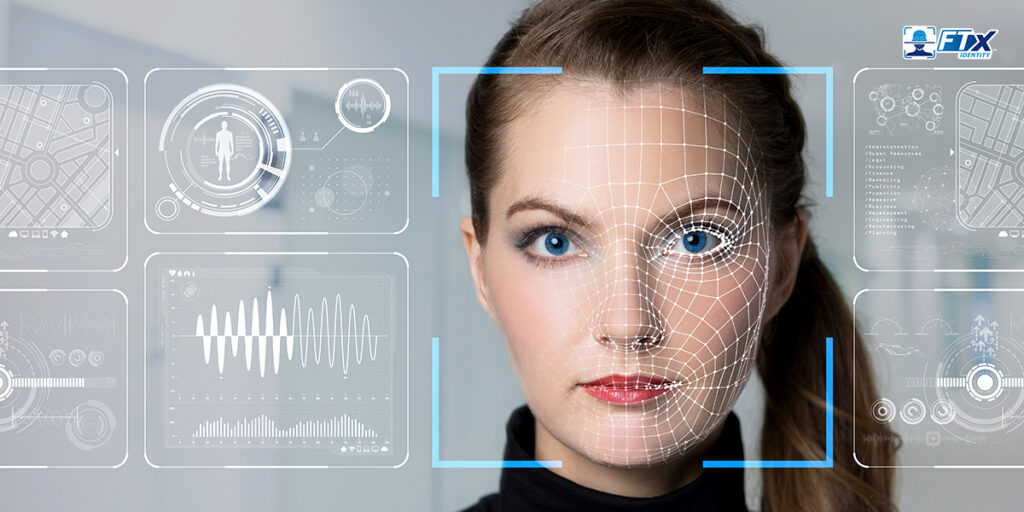

Deepfake Identity Theft

Deepfake identity theft refers to using AI-generated images to spoof someone’s likeness. This spoofing is typically used to gain access to personal data or make fraudulent purchases.

AI systems can now quickly create lifelike images of people. These images can be used in videos or to trick identity checker apps. Ultimately, deepfake identity theft is difficult to detect because of these factors:

- Realism: AI-generated images often display a high level of realism, making it tough to distinguish them from genuine images. Advanced tools like DALL-E can generate intricate textures, lighting effects, and even simulate human-like flaws.

- Fast-Paced Progress: AI is advancing quickly. Newer and more complex methods for creating realistic images are constantly emerging. Staying ahead of these developments demands continuous innovation and vigilance.

How do these deepfakes trick ID scanners? Essentially, the scanning software looks for similarities between faces and connects them to real people. The trick also takes advantage of how easily people are led to trust what they initially see. Most human eyes won’t ever detect the flaws in an almost seamless video.
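As a rough illustration of why a convincing fake can pass, many face-matching systems reduce each face to a list of numbers (an embedding) and accept a match when two embeddings are similar enough. The Python sketch below is a toy built on assumptions, not a description of how any particular ID scanner works: the four-number embeddings, the `is_match` helper, and the 0.9 threshold are all hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("vectors must be non-zero")
    return dot / (norm_a * norm_b)


def is_match(enrolled: list[float], candidate: list[float],
             threshold: float = 0.9) -> bool:
    """Accept the candidate when it is similar enough to the enrolled face.

    The 0.9 threshold is an assumed value for illustration only.
    """
    return cosine_similarity(enrolled, candidate) >= threshold


# Made-up embeddings; real systems use hundreds of dimensions.
enrolled_face = [0.90, 0.10, 0.40, 0.20]   # the genuine account holder
deepfake_face = [0.88, 0.12, 0.41, 0.19]   # a close imitation
stranger_face = [0.10, 0.90, 0.20, 0.70]   # an unrelated person

print("deepfake accepted:", is_match(enrolled_face, deepfake_face))
print("stranger accepted:", is_match(enrolled_face, stranger_face))
```

The point of the sketch is that the check only measures closeness. A fake that lands near the genuine embedding clears the same bar as the real person, which is why many systems add extra checks on top of the comparison.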

Unbelievable BUT TRUE!

Recently, scammers armed with AI-generated deepfake technology stole around $25 million from a multinational corporation in Hong Kong.

A finance worker at the firm moved $25 million into designated bank accounts after talking to several senior officers, including the company’s chief financial officer, on a video conference call.

No one on the call, besides the worker, was real! The worker said that, despite his initial suspicion, the people on the call both looked and sounded like colleagues he knew.

Scammers found publicly available video and audio of the impersonation targets via YouTube, then used deepfake technology to emulate their voices … to lure the victim to follow their instructions.


So How Do We Protect Ourselves?

When it comes to interactive deepfakes (phone calls and videos), one simple safeguard is to agree on a code word with family members, friends, and co-workers, and to ask for it whenever a request seems unusual.

Resisting these scams requires following the customary steps for protecting your identity, but with heightened awareness and caution. Knowing that typos and odd phrasing will no longer easily alert you to a bank imitator, for example, means you need to verify the identity of “bank representatives” who contact you before clicking or signing anything.
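One way to picture the "verify before clicking" step is to compare a link's host name with the web address you already know belongs to your bank. The Python sketch below is a minimal illustration under stated assumptions: `examplebank.com` is a placeholder domain, and `TRUSTED_DOMAINS` and `is_trusted_link` are hypothetical names. It does not catch every trick (look-alike characters, for instance), so treat it as a sketch of the idea rather than a complete defense.

```python
from urllib.parse import urlparse

# Placeholder domain for illustration; substitute the address printed on
# your card or statement, never one taken from the message itself.
TRUSTED_DOMAINS = {"examplebank.com"}


def is_trusted_link(url: str) -> bool:
    """Return True only if the link's host is a trusted domain or a subdomain of one."""
    host = urlparse(url).hostname
    if host is None:
        return False
    host = host.lower().rstrip(".")
    return any(host == domain or host.endswith("." + domain)
               for domain in TRUSTED_DOMAINS)


links = [
    "https://www.examplebank.com/login",              # genuine subdomain
    "https://examplebank.com.secure-login.example/",  # trusted name used as a prefix
    "https://examp1ebank.com/",                       # digit 1 in place of the letter l
]
for link in links:
    print(link, "->", "trusted" if is_trusted_link(link) else "do not click")
```

The check looks at the end of the host name because scammers often place a familiar name at the start of an address they control.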

Impersonations of government officials are also on the rise. In particular, any communication from a purported FBI or Homeland Security agent should not be acted upon until you’ve located said person on a credible website, like a departmental directory or legitimate LinkedIn profile.

And don’t be duped by the many scammers today who imitate friends, using information stolen from social media posts and profiles, to carefully concoct a scam. Just because something sounds right doesn’t mean it’s real.

More than ever, do not trust even the personas of purported friends for any request that involves money or personal details. Rather than simply handing over your bank account number in response to a call for help, you should call your friend to verify that your online correspondent is really them (or someone they’ve indeed empowered to assist them).

Sources: Reuters, Forbes, Money, Sumsub